Why Meta’s Llama 4 is Revolutionizing AI: Outperforming Competitors and Setting New Benchmarks

Meta’s latest offering, Llama 4, is turning heads (and benchmarks) in the world of large language models (LLMs). Designed with cutting-edge innovations, Llama 4 is not only redefining what open-source AI can achieve but is also challenging established giants like OpenAI’s GPT-4 and Anthropic’s Claude. In this in-depth article, we’ll explore the groundbreaking features of Llama 4, dive into its impressive performance statistics, and see how it stacks up against the competition.

Breaking Down Llama 4: A New Era of Multimodal AI

Meta’s Llama 4 marks a significant leap forward by blending multimodal inputs (text, image, and video) with a state-of-the-art Mixture-of-Experts (MoE) architecture. This approach allows the model to activate only the most relevant parts of its network for any given task—resulting in more efficient computations while delivering high-quality outputs.
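
To make the "activate only the most relevant parts" idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a generic illustration of the Mixture-of-Experts technique, not Meta's implementation: the router weights, the toy experts, and the names `route_token` and `make_expert` are all hypothetical.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(token, router_weights, experts, top_k=1):
    """Send one token vector to its top_k experts and mix their outputs.

    router_weights: one weight vector per expert (hypothetical learned gate).
    experts: list of callables, each mapping a vector to a vector.
    """
    # The router score for each expert is a dot product with the token.
    scores = [sum(w * x for w, x in zip(weights, token)) for weights in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        expert_out = experts[i](token)
        gate = probs[i] / norm  # renormalise gates over the chosen experts
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen

def make_expert(scale):
    """Toy expert: scales every component of the input by a constant."""
    return lambda vec: [scale * v for v in vec]

if __name__ == "__main__":
    random.seed(0)
    dim, num_experts = 4, 16  # 16 experts, matching Scout's expert count
    router = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)]
    experts = [make_expert(i + 1) for i in range(num_experts)]
    out, used = route_token([0.5, -1.0, 0.25, 2.0], router, experts, top_k=1)
    print("experts evaluated:", used, "of", num_experts)
```

With `top_k=1`, only one of the sixteen toy experts runs for the token, which is where the compute saving comes from: the cost per token scales with the experts that are evaluated, not with the total number of experts stored.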

The Llama 4 family comprises three main models:

- Llama 4 Scout
  - Active Parameters: 17 billion
  - Total Parameters: 109 billion
  - Experts: 16
  - Context Window: Up to 10 million tokens
  - Key Strength: Optimized for long-context tasks and multimodal input, Scout fits on a single NVIDIA H100 GPU when heavily quantized.
- Llama 4 Maverick
  - Active Parameters: 17 billion
  - Total Parameters: 400 billion
  - Experts: 128
  - Context Window: Up to 1 million tokens
  - Key Strength: Maverick is engineered for general-purpose tasks with enhanced coding, reasoning, and image understanding abilities, making it a strong all-around performer.
- Llama 4 Behemoth (In development)
  - Active Parameters: 288 billion (approximate)
  - Total Parameters: Nearly 2 trillion
  - Experts: 16
  - Key Strength: Positioned as a teacher model, Behemoth is expected to drive future performance improvements across the Llama 4 ecosystem.
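
The claim that Scout fits on a single H100 only when heavily quantized can be checked with back-of-the-envelope arithmetic. The sketch below is a rough estimate under stated assumptions: it counts weight storage only (no KV cache, activations, or runtime overhead), assumes the 80 GB H100 variant, and uses the total parameter counts listed above. The helper names are hypothetical.

```python
H100_MEMORY_GB = 80  # assumption: the 80 GB variant of the NVIDIA H100

# Total parameter counts from the model list above.
MODELS = {
    "Scout": 109e9,
    "Maverick": 400e9,
}

def weight_memory_gb(total_params, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return total_params * bits_per_weight / 8 / 1e9

def fits_on_one_gpu(total_params, bits_per_weight, gpu_gb=H100_MEMORY_GB):
    """True if the weights alone fit in the given GPU memory."""
    return weight_memory_gb(total_params, bits_per_weight) <= gpu_gb

if __name__ == "__main__":
    for name, params in MODELS.items():
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            verdict = "fits" if fits_on_one_gpu(params, bits) else "does not fit"
            print(f"{name} at {bits}-bit: {gb:.1f} GB, {verdict} in {H100_MEMORY_GB} GB")
```

Under these assumptions Scout needs about 218 GB at 16-bit and 54.5 GB at 4-bit, so only the 4-bit case fits in 80 GB. All experts must be resident in memory even though only a fraction is active per token, which is why the total parameter count, not the active count, drives this estimate.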

Meta’s commitment to open-source and accessible AI research is evident in these models. By offering powerful tools under terms that encourage adaptation and further innovation, Meta is democratizing AI and challenging the closed ecosystems upheld by some competitors.

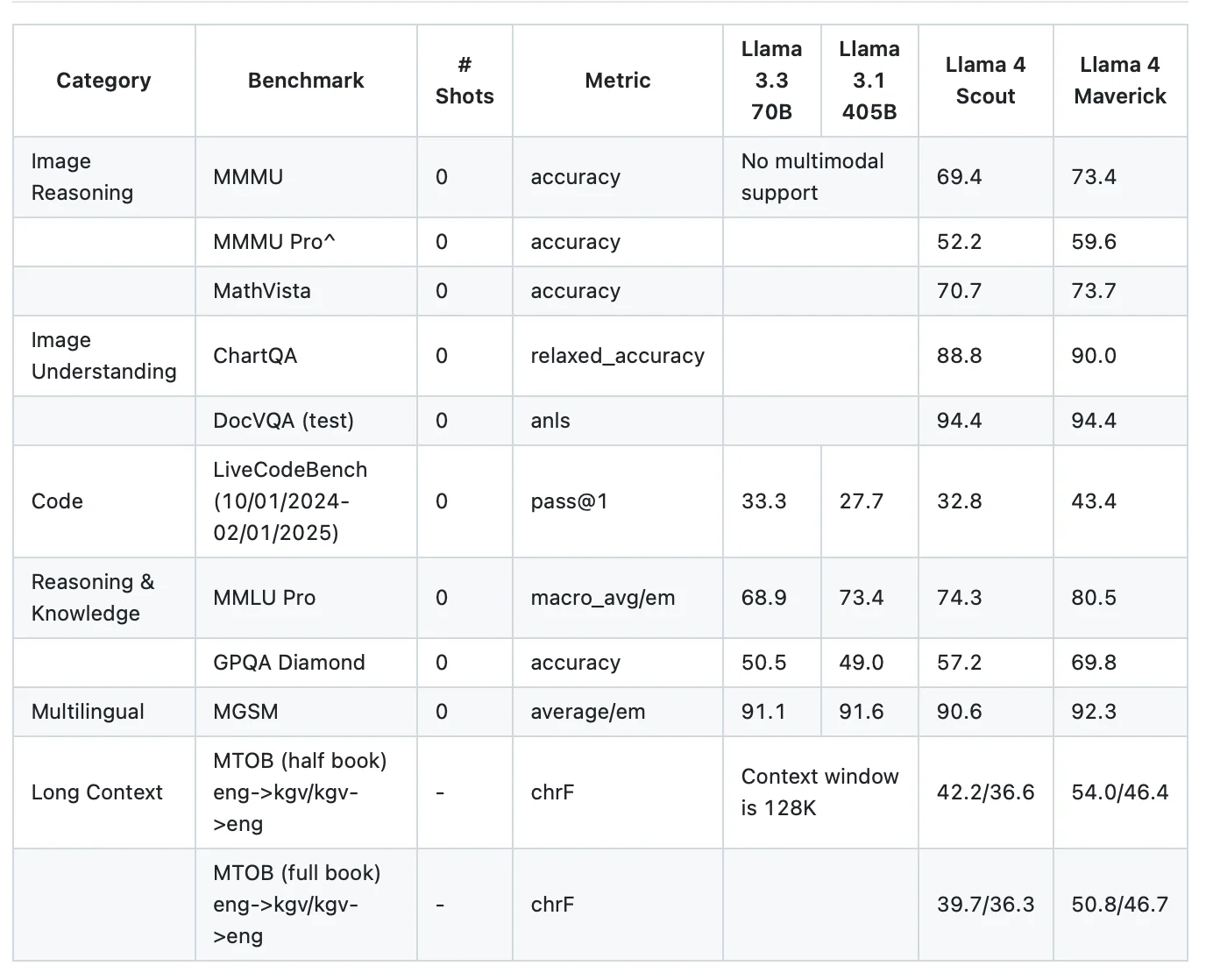

Outperforming the Competition: Benchmark Insights

Benchmark comparisons published so far suggest that Llama 4 delivers strong results across multiple categories. Despite activating only 17 billion parameters per token, both Scout and Maverick have demonstrated competitive performance on reasoning, math, and coding benchmarks. Some reports indicate that, in certain tests, Llama 4 even outperforms premium models such as OpenAI’s GPT-4 on the LMArena benchmark.

Below is a simplified table summarizing key benchmark metrics for Llama 4 Scout compared with other prominent models:

| Benchmark | Llama 4 Scout | GPT-4 (Reported) | Claude 3.5 Sonnet |
|---|---|---|---|
| MMLU | ~88.3% (est.) | ~86.4% (varies) | 90.4% (reported) |
| MMMU | 69.4% | – | – |
| HumanEval | – | – | 92% (reported)* |
| Math | – | – | 71.1% (when benchmarked)* |
| Context Window | Up to 10M tokens | 8K tokens (standard) | 200K tokens |

*Note: Not all benchmarks are available for each model; numbers are drawn from multiple independent test reports and Meta's internal data shared publicly.

Llama 4’s extended context window is a game changer. While the competitors in the table above top out at 8K to 200K tokens per interaction, Scout can analyze documents equivalent to an entire encyclopedia, opening up novel applications in legal, academic, and enterprise settings.
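
One way to see what the context-window gap means in practice is to count how many separate requests a long document would need under each window. The sketch below uses the nominal window sizes from the table above; the 1-million-token document size, the `reserved_tokens` budget, and the function name are hypothetical choices for illustration.

```python
import math

# Nominal context windows in tokens, taken from the comparison table.
CONTEXT_WINDOWS = {
    "Llama 4 Scout": 10_000_000,
    "GPT-4 (standard)": 8_000,
    "Claude 3.5 Sonnet": 200_000,
}

def chunks_needed(document_tokens, window_tokens, reserved_tokens=0):
    """Number of separate requests needed to read the whole document.

    reserved_tokens is a hypothetical budget held back for the prompt and reply.
    """
    usable = window_tokens - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens leaves no room for the document")
    return math.ceil(document_tokens / usable)

if __name__ == "__main__":
    document_tokens = 1_000_000  # hypothetical document size
    for model, window in CONTEXT_WINDOWS.items():
        print(f"{model}: {chunks_needed(document_tokens, window)} request(s)")
```

Splitting a document into chunks also means the model never sees all of it at once, so the difference is not only in the number of requests but in whether cross-references between distant sections can be resolved in a single pass.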

Why Llama 4 Matters: The Bigger Picture

Meta’s Llama 4 is more than just an incremental model upgrade—it represents a strategic shift in the AI landscape. Here’s why:

- Enhanced Multimodal Capabilities: By natively supporting text, images, and video in a unified architecture, Llama 4 enables richer interactions and more creative applications. Developers can build AI systems that synthesize information from multiple sources seamlessly.
- Unparalleled Context Length: The 10-million-token context window of Llama 4 Scout allows for deep, long-form analysis that is unprecedented in open-source models. This is ideal for tasks like summarization of lengthy reports, codebase analysis, or detailed conversation histories.
- Resource Efficiency through MoE: The Mixture-of-Experts design means Llama 4 activates only relevant "experts" per token, which boosts compute efficiency and performance. This is not only cost-effective but also makes high-quality AI more accessible.
- Open-Source Innovation: By maintaining an open access philosophy, Meta empowers developers, researchers, and businesses to innovate faster and customize models to fit diverse needs, breaking barriers imposed by proprietary systems.
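
The resource-efficiency point can be put in rough numbers. The sketch below computes the share of parameters that is active per token for each model, using the counts given earlier in this article, and applies the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass. That rule is an approximation introduced here for illustration, not a figure from Meta, and the function names are hypothetical.

```python
# (active, total) parameter counts from the model list in this article.
MODELS = {
    "Scout": (17e9, 109e9),
    "Maverick": (17e9, 400e9),
    "Behemoth": (288e9, 2e12),  # "nearly 2 trillion", rounded up here
}

def active_fraction(active, total):
    """Share of the model's parameters used for a single token."""
    return active / total

def forward_flops_per_token(active):
    """Rule-of-thumb estimate: about 2 FLOPs per active parameter per token."""
    return 2 * active

if __name__ == "__main__":
    for name, (active, total) in MODELS.items():
        share = active_fraction(active, total) * 100
        gflops = forward_flops_per_token(active) / 1e9
        print(f"{name}: {share:.2f}% of parameters active, ~{gflops:.0f} GFLOPs per token")
```

By this estimate Scout and Maverick cost about the same to run per token, since both activate 17 billion parameters, even though Maverick stores nearly four times as many weights.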

What This Means for the Future of AI

Meta’s Llama 4 is setting a new standard for AI performance, efficiency, and openness. With industry giants racing to scale up their models, Meta’s approach of leveraging open-source principles with powerful technical innovations could recalibrate how future AI systems are developed and deployed. As more enterprises integrate Llama 4 into their products—whether it’s for enhanced customer support bots, intelligent document analytics, or creative assistants—the overall quality and accessibility of AI will continue to improve.

Final Thoughts

Meta’s bold advancements in Llama 4 are a reminder that innovation in AI is as much about rethinking architecture and efficiency as it is about scaling parameter counts. By combining an extended context window, multimodal capabilities, and a resource-conscious MoE design, Llama 4 is not only beating competitors on specific benchmarks but is also paving the way for practical, real-world applications that were previously out of reach for open-source models.

Whether you’re a developer eager to build the next wave of AI-powered applications or a business leader looking to harness the power of cutting-edge AI with minimal overhead, Llama 4 represents a significant, democratizing break
